
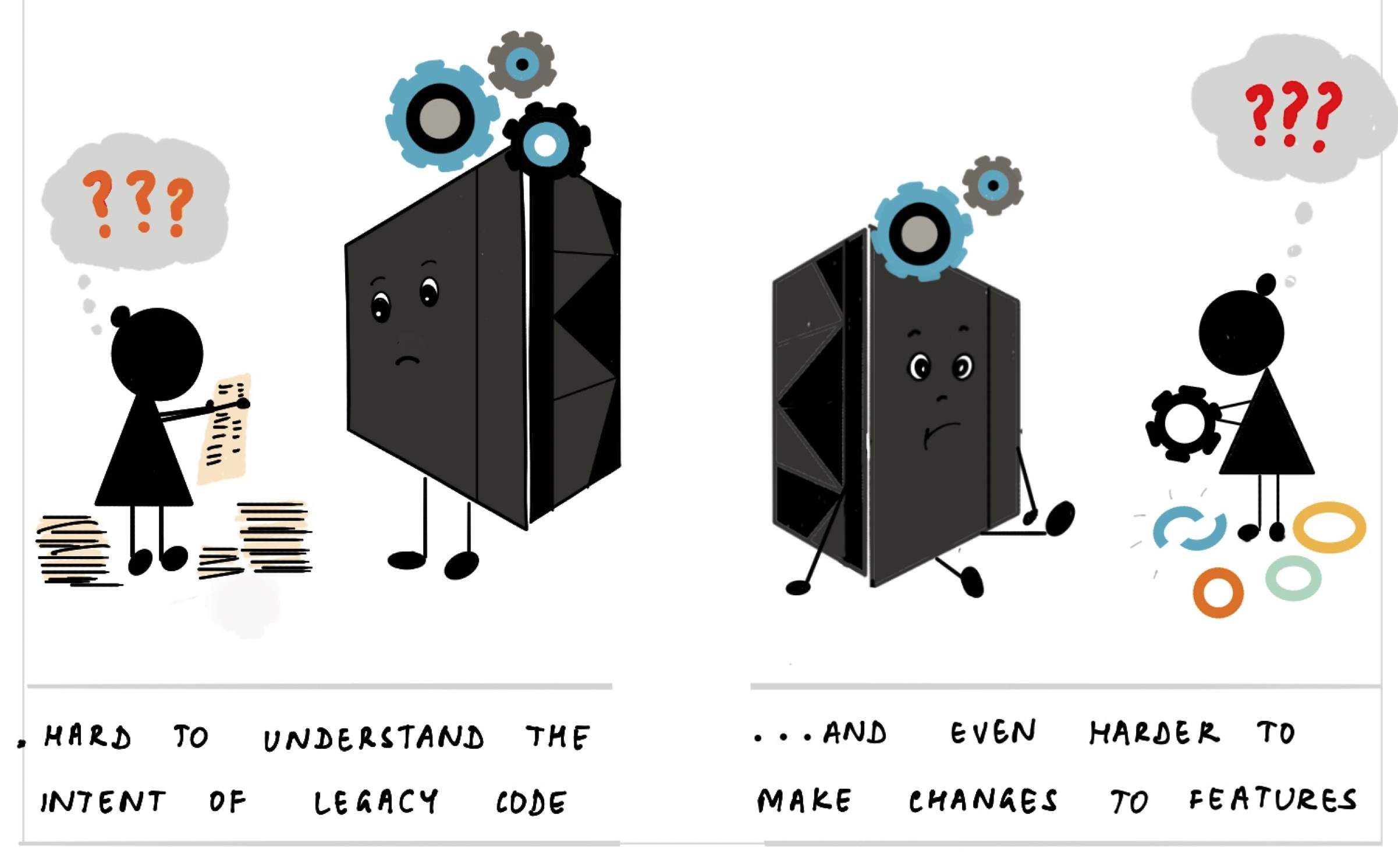

Gitanjali Venkatraman does wonderful illustrations of complex topics (which is why I was so happy to work with her on our Expert Generalists article). She has now published the latest in her series of illustrated guides: tackling the complex topic of Mainframe Modernization.

In it she illustrates the history and value of mainframes, why modernization is so tricky, and how to tackle the problem by breaking it down into tractable pieces. I love the clarity of her explanations, and smile frequently at the way she enhances her words with her quirky pictures.

❄ ❄ ❄ ❄ ❄

Gergely Orosz on social media

Unpopular opinion:

Current code review tools just don't make much sense for AI-generated code

When reviewing code I really want to know:

- The prompt made by the dev

- What corrections the other dev made to the code

- Clear marking of AI-generated code not changed by a human

Some people pushed back, saying they don't (and shouldn't) care whether it was written by a human, generated by an LLM, or copy-pasted from Stack Overflow.

In my view it matters a lot, because of the second vital purpose of code review.

When asked why we do code reviews, most people will answer with the first vital purpose: quality control. We want to ensure bad code gets blocked before it hits mainline. We do this to avoid bugs and to avoid other quality issues, in particular comprehensibility and ease of change.

But I hear the second vital purpose less often: code review is a mechanism to communicate and educate. If I'm submitting some sub-standard code, and it gets rejected, I want to know why so that I can improve my programming. Maybe I'm unaware of some library features, or maybe there are some project-specific standards I haven't run into yet, or maybe my naming isn't as clear as I thought it was. Whatever the reasons, I need to know in order to learn. And my employer needs me to learn, so I can be more effective.

We need to know the writer of the code we review, both so we can communicate our better practice to them and so we know how to improve things. With a human, it's a conversation, and perhaps some documentation if we realize we've needed to explain things repeatedly. But with an LLM it's about how to modify its context, as well as humans learning how to better drive the LLM.

❄ ❄ ❄ ❄ ❄

Wondering why I've been making a lot of posts like this recently? I explain why I've been reviving the link blog.

❄ ❄ ❄ ❄ ❄

Simon Willison describes how he uses LLMs to build disposable but useful web apps

These are the characteristics I have found to be most effective in building tools of this nature:

- A single file: inline JavaScript and CSS in a single HTML file means the least hassle in hosting or distributing them, and crucially means you can copy and paste them out of an LLM response.

- Avoid React, or anything with a build step. The problem with React is that JSX requires a build step, which makes everything massively less convenient. I prompt "no react" and skip that whole rabbit hole entirely.

- Load dependencies from a CDN. The fewer dependencies the better, but if there's a well-known library that helps solve a problem I'm happy to load it from CDNjs or jsdelivr or similar.

- Keep them small. A few hundred lines means the maintainability of the code doesn't matter too much: any good LLM can read them and understand what they're doing, and rewriting them from scratch with help from an LLM takes just a few minutes.

His repository includes all these tools, together with transcripts of the chats that got the LLMs to build them.

❄ ❄ ❄ ❄ ❄

Obie Fernandez: while many engineers are underwhelmed by AI tools, some senior engineers are finding them really valuable. He feels that senior engineers have an oft-unspoken mindset which, in conjunction with an LLM, enables the LLM to be much more valuable.

Levels of abstraction and generalization problems get talked about a lot because they're easy to name. But they're far from the whole story.

Other tools show up just as often in real work:

- A sense for blast radius. Knowing which changes are safe to make loudly and which should be quiet and contained.

- A feel for sequencing. Knowing when a technically correct change is still wrong because the system or the team isn't ready for it yet.

- An instinct for reversibility. Preferring moves that keep options open, even if they look less elegant in the moment.

- An awareness of social cost. Recognizing when a clever solution will confuse more people than it helps.

- An allergy to false confidence. Spotting places where tests are green but the model is wrong.

❄ ❄ ❄ ❄ ❄

Emil Stenström built an HTML5 parser in Python with coding agents, using GitHub Copilot in Agent mode with Claude Sonnet 3.7. He automatically approved most commands. It took him "a couple of months on off-hours", including at least one restart from scratch. The parser now passes all the tests in the html5lib test suite.

After writing the parser, I still don't know HTML5 properly. The agent wrote it for me. I guided it when it came to API design and corrected bad decisions at the high level, but it did ALL of the gruntwork and wrote all of the code.

I handled all git commits myself, reviewing code as it went in. I didn't understand all the algorithmic choices, but I understood when it didn't do the right thing.

Although he gives an overview of what happens, there's not very much information on his workflow and how he interacted with the LLM. There's certainly not enough detail here to try to replicate his approach. This is in contrast to Simon Willison (above), who has detailed links to his chat transcripts, although his are much smaller tools and I haven't looked at the transcripts properly to see how useful they are.

One thing that is clear, however, is the vital need for a comprehensive test suite. Much of his work is driven by having that suite as a clear guide for him and the LLM agents.

JustHTML is about 3,000 lines of Python with 8,500+ tests passing. I couldn't have written it this quickly without the agent.

But "quickly" doesn't mean "without thinking." I spent a lot of time reviewing code, making design decisions, and steering the agent in the right direction. The agent did the typing; I did the thinking.

❄ ❄

Then Simon Willison ported the library to JavaScript:

Time elapsed from project idea to finished library: about 4 hours, during which I also bought and decorated a Christmas tree with family and watched the latest Knives Out movie.

One of his lessons:

If you can reduce a problem to a robust test suite you can set a coding agent loop loose on it with a high degree of confidence that it will eventually succeed. I called this designing the agentic loop a few months ago. I think it's the key skill to unlocking the potential of LLMs for complex tasks.

Our experience at Thoughtworks backs this up. We've been doing a fair bit of work recently in legacy modernization (mainframe and otherwise) using AI to migrate substantial software systems. Having a robust test suite is necessary (but not sufficient) to make this work. I hope to share my colleagues' experiences on this in the coming months.
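The test-driven loop in Willison's lesson can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_tests` and `propose_fix` are hypothetical callables (the first returns the names of failing tests, the second stands in for one turn of a coding agent), and `agentic_loop` is not an API from Willison's post or from any agent tool. What it shows is that the test suite, not the agent, decides when the work is done.

```python
from typing import Callable, List

# Hypothetical stand-ins, not a real agent API:
# run_tests() returns the names of failing tests (empty list means green);
# propose_fix(failures) represents one agent turn that edits the code.
RunTests = Callable[[], List[str]]
ProposeFix = Callable[[List[str]], None]


def agentic_loop(run_tests: RunTests, propose_fix: ProposeFix,
                 max_iterations: int = 50) -> bool:
    """Feed test failures back to an agent until the suite passes.

    Returns True if the suite goes green within max_iterations agent turns.
    """
    for _ in range(max_iterations):
        failures = run_tests()
        if not failures:
            return True  # the test suite is the stopping condition
        propose_fix(failures)  # the agent receives the failing test names as feedback
    # Check once more after the final agent turn.
    return not run_tests()


# Toy usage: a fake "codebase" where each agent turn fixes one failing test.
broken = {"test_parse", "test_escape", "test_nesting"}

def fake_run_tests() -> List[str]:
    return sorted(broken)

def fake_agent(failures: List[str]) -> None:
    broken.discard(failures[0])

print(agentic_loop(fake_run_tests, fake_agent))  # True
```

The iteration cap matters: a suite the agent can never satisfy would otherwise loop forever, which is one reason a robust suite is necessary but not sufficient.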

But before I leave Willison's post, I should highlight his final open questions on the legalities, ethics, and effectiveness of all this; they are well worth contemplating.
