[ad_1]

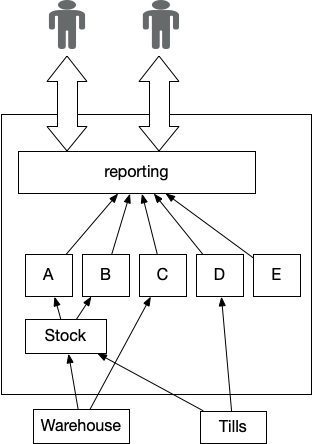

A standard characteristic of legacy programs is the Essential Aggregator,

because the title implies this produces info important to the working of a

enterprise and thus can’t be disrupted. Nonetheless in legacy this sample

virtually at all times devolves to an invasive extremely coupled implementation,

successfully freezing itself and upstream programs into place.

Determine 1: Reporting Essential Aggregator

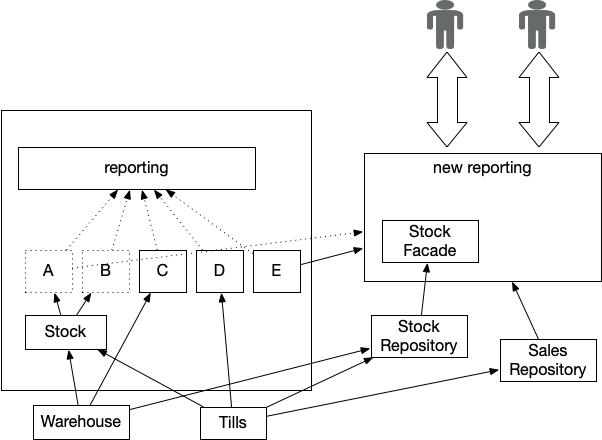

Divert the Move is a method that begins a Legacy Displacement initiative

by creating a brand new implementation of the Essential Aggregator

that, so far as attainable, is decoupled from the upstream programs that

are the sources of the info it must function. As soon as this new implementation

is in place we will disable the legacy implementation and therefore have

much more freedom to vary or relocate the assorted upstream knowledge sources.

Determine 2: Extracted Essential Aggregator

The choice displacement method when now we have a Essential Aggregator

in place is to depart it till final. We are able to displace the

upstream programs, however we have to use Legacy Mimic to

make sure the aggregator inside legacy continues to obtain the info it

wants.

Both possibility requires using a Transitional Structure, with

short-term elements and integrations required throughout the displacement

effort to both assist the Aggregator remaining in place, or to feed knowledge to the brand new

implementation.

How It Works

Diverting the Move creates a brand new implementation of a cross reducing

functionality, on this instance that being a Essential Aggregator.

Initially this implementation would possibly obtain knowledge from

current legacy programs, for instance through the use of the

Occasion Interception sample. Alternatively it could be less complicated

and extra worthwhile to get knowledge from supply programs themselves by way of

Revert to Supply. In observe we are inclined to see a

mixture of each approaches.

The Aggregator will change the info sources it makes use of as current upstream programs

and elements are themselves displaced from legacy,

thus it is dependency on legacy is decreased over time.

Our new Aggregator

implementation may make the most of alternatives to enhance the format,

high quality and timeliness of knowledge

as supply programs are migrated to new implementations.

Map knowledge sources

If we’re going to extract and re-implement a Essential Aggregator

we first want to know how it’s linked to the remainder of the legacy

property. This implies analyzing and understanding

the last word supply of knowledge used for the aggregation. It is vital

to recollect right here that we have to get to the last word upstream system.

For instance

whereas we’d deal with a mainframe, say, because the supply of fact for gross sales

info, the info itself would possibly originate in in-store until programs.

Making a diagram displaying the

aggregator alongside the upstream and downstream dependencies

is vital.

A system context diagram, or related, can work properly right here; now we have to make sure we

perceive precisely what knowledge is flowing from which programs and the way

usually. It’s normal for legacy options to be

an information bottleneck: further helpful knowledge from (newer) supply programs is

usually discarded because it was too tough to seize or signify

in legacy. Given this we additionally must seize which upstream supply

knowledge is being discarded and the place.

Consumer necessities

Clearly we have to perceive how the potential we plan to “divert”

is utilized by finish customers. For Essential Aggregator we frequently

have a really massive mixture of customers for every report or metric. It is a

traditional instance of the place Function Parity can lead

to rebuilding a set of “bloated” studies that actually do not meet present

person wants. A simplified set of smaller studies and dashboards would possibly

be a greater resolution.

Parallel working could be vital to make sure that key numbers match up

throughout the preliminary implementation,

permitting the enterprise to fulfill themselves issues work as anticipated.

Seize how outputs are produced

Ideally we wish to seize how present outputs are produced.

One approach is to make use of a sequence diagram to doc the order of

knowledge reception and processing within the legacy system, and even only a

stream chart.

Nonetheless there are

usually diminishing returns in attempting to totally seize the present

implementation, it commonplace to seek out that key data has been

misplaced. In some circumstances the legacy code could be the one

“documentation” for the way issues work and understanding this could be

very tough or pricey.

One writer labored with a consumer who used an export

from a legacy system alongside a extremely complicated spreadsheet to carry out

a key monetary calculation. Nobody at the moment on the group knew

how this labored, fortunately we had been put in contact with a not too long ago retired

worker. Sadly after we spoke to them it turned out they’d

inherited the spreadsheet from a earlier worker a decade earlier,

and sadly this individual had handed away some years in the past. Reverse engineering the

legacy report and (twice ‘model migrated’) excel spreadsheet was extra

work than going again to first ideas and defining from recent what

the calculation ought to do.

Whereas we might not be constructing to characteristic parity within the

alternative finish level we nonetheless want key outputs to ‘agree’ with legacy.

Utilizing our aggregation instance we’d

now have the ability to produce hourly gross sales studies for shops, nevertheless enterprise

leaders nonetheless

want the tip of month totals and these must correlate with any

current numbers.

We have to work with finish customers to create labored examples

of anticipated outputs for given take a look at inputs, this may be important for recognizing

which system, outdated or new, is ‘appropriate’ in a while.

Supply and Testing

We have discovered this sample lends itself properly to an iterative method

the place we construct out the brand new performance in slices. With Essential

Aggregator

this implies delivering every report in flip, taking all of them the best way

by means of to a manufacturing like atmosphere. We are able to then use

Parallel Working

to watch the delivered studies as we construct out the remaining ones, in

addition to having beta customers giving early suggestions.

Our expertise is that many legacy studies include undiscovered points

and bugs. This implies the brand new outputs hardly ever, if ever, match the present

ones. If we do not perceive the legacy implementation totally it is usually

very arduous to know the reason for the mismatch.

One mitigation is to make use of automated testing to inject recognized knowledge and

validate outputs all through the implementation section. Ideally we might

do that with each new and legacy implementations so we will examine

outputs for a similar set of recognized inputs. In observe nevertheless attributable to

availability of legacy take a look at environments and complexity of injecting knowledge

we frequently simply do that for the brand new system, which is our advisable

minimal.

It’s normal to seek out “off system” workarounds in legacy aggregation,

clearly it is essential to attempt to monitor these down throughout migration

work.

The commonest instance is the place the studies

wanted by the management crew will not be truly accessible from the legacy

implementation, so somebody manually manipulates the studies to create

the precise outputs they

see – this usually takes days. As no-one desires to inform management the

reporting does not truly work they usually stay unaware that is

how actually issues work.

Go Stay

As soon as we’re pleased performance within the new aggregator is appropriate we will divert

customers in direction of the brand new resolution, this may be finished in a staged trend.

This would possibly imply implementing studies for key cohorts of customers,

a interval of parallel working and at last reducing over to them utilizing the

new studies solely.

Monitoring and Alerting

Having the proper automated monitoring and alerting in place is important

for Divert the Move, particularly when dependencies are nonetheless in legacy

programs. It’s essential to monitor that updates are being acquired as anticipated,

are inside recognized good bounds and likewise that finish outcomes are inside

tolerance. Doing this checking manually can rapidly change into lots of work

and may create a supply of error and delay going forwards.

Basically we advocate fixing any knowledge points discovered within the upstream programs

as we wish to keep away from re-introducing previous workarounds into our

new resolution. As an additional security measure we will depart the Parallel Working

in place for a interval and with selective use of reconciliation instruments, generate an alert if the outdated and new

implementations begin to diverge too far.

When to Use It

This sample is most helpful when now we have cross reducing performance

in a legacy system that in flip has “upstream” dependencies on different elements

of the legacy property. Essential Aggregator is the commonest instance. As

an increasing number of performance will get added over time these implementations can change into

not solely enterprise crucial but additionally massive and sophisticated.

An usually used method to this case is to depart migrating these “aggregators”

till final since clearly they’ve complicated dependencies on different areas of the

legacy property.

Doing so creates a requirement to maintain legacy up to date with knowledge and occasions

as soon as we being the method of extracting the upstream elements. In flip this

signifies that till we migrate the “aggregator” itself these new elements stay

to a point

coupled to legacy knowledge constructions and replace frequencies. We even have a big

(and sometimes essential) set of customers who see no enhancements in any respect till close to

the tip of the general migration effort.

Diverting the Move affords a substitute for this “depart till the tip” method,

it may be particularly helpful the place the price and complexity of constant to

feed the legacy aggregator is critical, or the place corresponding enterprise

course of modifications means studies, say, should be modified and tailored throughout

migration.

Enhancements in replace frequency and timeliness of knowledge are sometimes key

necessities for legacy modernisation

tasks. Diverting the Move provides a chance to ship

enhancements to those areas early on in a migration undertaking,

particularly if we will apply

Revert to Supply.

Knowledge Warehouses

We frequently come throughout the requirement to “assist the Knowledge Warehouse”

throughout a legacy migration as that is the place the place key studies (or related) are

truly generated. If it seems the DWH is itself a legacy system then

we will “Divert the Move” of knowledge from the DHW to some new higher resolution.

Whereas it may be attainable to have new programs present an equivalent feed

into the warehouse care is required as in observe we’re as soon as once more coupling our new programs

to the legacy knowledge format together with it is attendant compromises, workarounds and, very importantly,

replace frequencies. We’ve

seen organizations substitute important parts of legacy property however nonetheless be caught

working a enterprise on outdated knowledge attributable to dependencies and challenges with their DHW

resolution.

This web page is a part of:

Patterns of Legacy Displacement

Most important Narrative Article

Patterns

[ad_2]